In Azure, there are several ways to execute commands on a running virtual machine besides using RDP or SSH to remote in and open a shell. One of the most common is the Run Command feature, which is present on all Azure Virtual Machines. Because pentesters use it so often, it is also one of the few logs I’ve seen that actually has an alert configured around it. However, there are several other ways to execute commands, which I will explain in this article along with the associated defensive information. Note that all of the techniques here run with SYSTEM/root privileges by default unless otherwise configured. This article is heavily biased towards Windows, but the techniques still apply to Linux (with some changes).

Run Command

The Run Command feature on an Azure VM works differently from the other methods discussed later, because it uses an agent instead of an extension. This agent is installed automatically on both Linux and Windows VMs once they are provisioned in Azure.

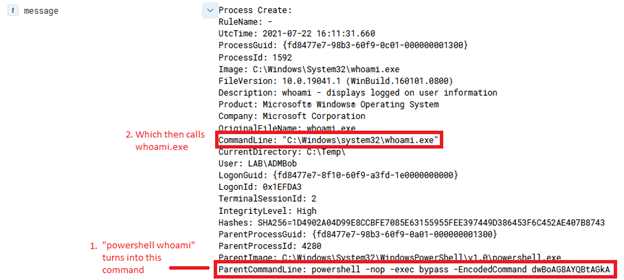

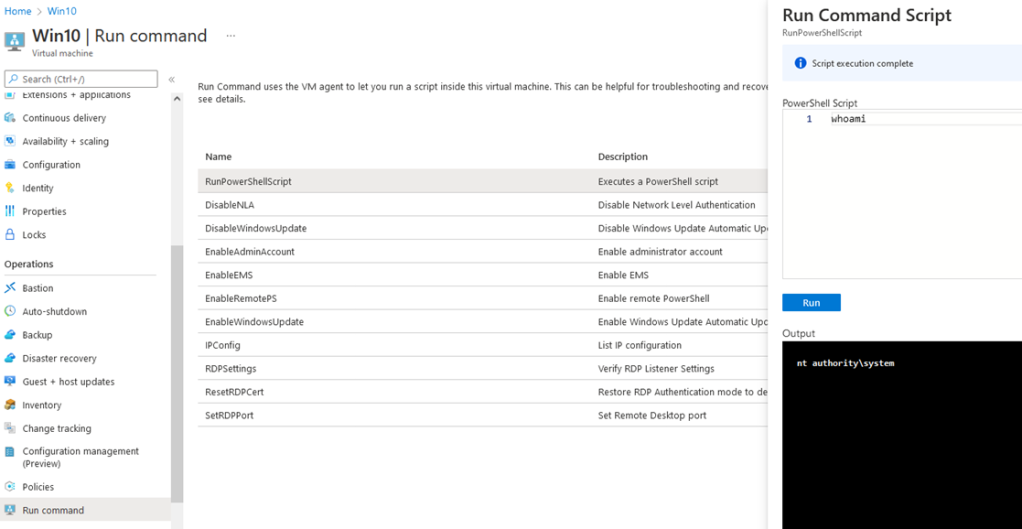

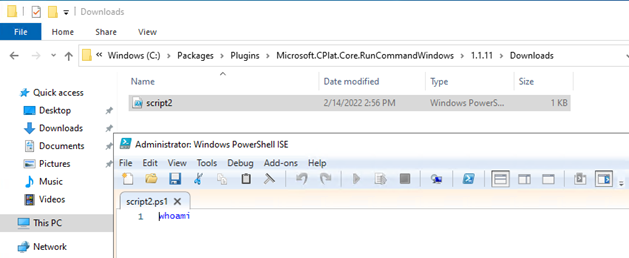

Via the portal, there are several pre-configured scripts to run, plus one (RunPowerShellScript) that lets you define your own command. The command is uploaded to the VM via the agent as a .PS1 file and stored on disk at C:\Packages\Plugins\Microsoft.CPlat.Core.RunCommandWindows\1.1.11\Downloads, as shown in figure 2.

This can lead to some fun things, such as transferring an entire binary over for execution, which is what the ‘Invoke-AzureRunProgram’ command in PowerZure does. Run Command can also be used via Az PowerShell, the Azure REST API, or the Azure CLI; in Az PowerShell, for example, the cmdlet is Invoke-AzVMRunCommand. From an operational security standpoint, this is arguably the loudest possible way to execute a command on a VM in Azure. I would compare its noisiness to cmd.exe /c, simply because it’s the best-known way to execute a command on a VM.
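For reference, here is a minimal sketch of what a Run Command call looks like at the REST level, written in Python with only the standard library. It just builds the POST URL and JSON body for the built-in RunPowerShellScript command; it does not authenticate or send anything. The api-version value is a placeholder I picked for illustration, so check the Compute REST API reference for the version you target.

```python
import json
from urllib.parse import quote

# Placeholder api-version (assumption): confirm against the Compute REST API docs.
API_VERSION = "2023-03-01"


def build_run_command_request(subscription_id, resource_group, vm_name, lines):
    """Return (url, json_body) for a Run Command POST using RunPowerShellScript.

    Nothing is sent over the network; this only shapes the request."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        "/providers/Microsoft.Compute"
        f"/virtualMachines/{quote(vm_name)}"
        f"/runCommand?api-version={API_VERSION}"
    )
    body = {
        # Built-in command ID that accepts a user-defined PowerShell script
        "commandId": "RunPowerShellScript",
        # The script is passed as a list of lines
        "script": list(lines),
    }
    return url, json.dumps(body)
```

For example, `build_run_command_request("<subscription-id>", "example-rg", "example-vm", ["whoami"])` (hypothetical identifiers) yields the URL and body. Actually sending it requires an ARM bearer token, and because the action is a long-running operation, the result has to be polled for afterwards.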

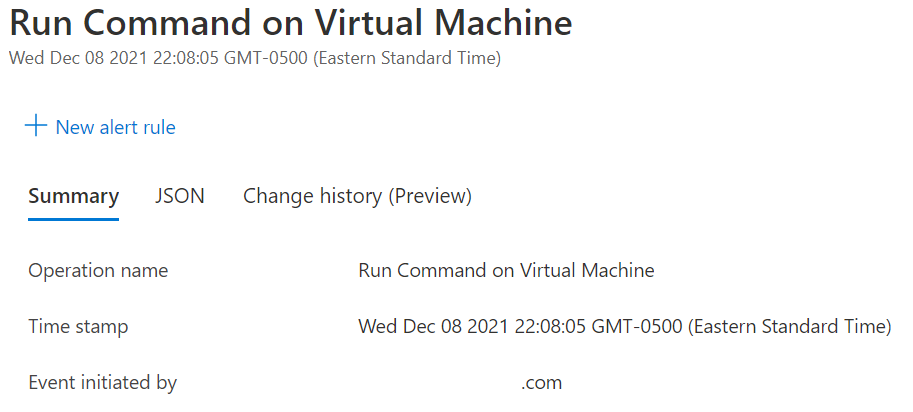

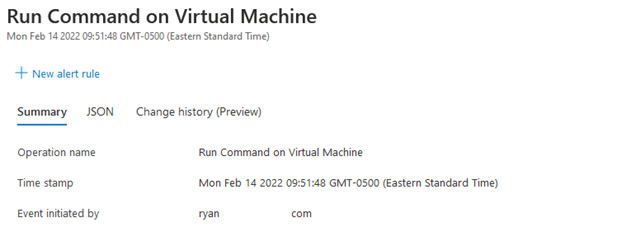

As a defender, the log to look for in the Azure Activity log is ‘Run Command on Virtual Machine’, as shown in figure 3.
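If Activity Log data is exported as JSON, this event can also be picked out programmatically. The Python sketch below assumes records shaped like the output of `az monitor activity-log list`, where operationName is an object whose value for Run Command is Microsoft.Compute/virtualMachines/runCommand/action. The helper names are hypothetical, and the field layout should be checked against your own export (the Log Analytics AzureActivity table, for instance, uses different column names).

```python
import json

RUN_COMMAND_OP = "microsoft.compute/virtualmachines/runcommand/action"


def load_records(path):
    """Load a list of exported Activity Log records from a JSON file."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def operation_value(record):
    """Return the record's operation name, lower-cased.

    Assumption: operationName is either an object with a 'value' key
    (as in CLI exports) or a plain string."""
    op = record.get("operationName") or ""
    if isinstance(op, dict):
        op = op.get("value") or ""
    return op.lower()


def run_command_events(records):
    """Yield (caller, resourceId, eventTimestamp) for each Run Command event."""
    for rec in records:
        if operation_value(rec) == RUN_COMMAND_OP:
            yield rec.get("caller"), rec.get("resourceId"), rec.get("eventTimestamp")
```

Usage would look like `for hit in run_command_events(load_records("activity.json")): print(hit)`, where activity.json is a hypothetical export file.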

Unfortunately, the log does not include the command that was actually run, so if an alert is triggered purely off this log, it is up to the responder to look on disk and determine whether that command (stored in the .PS1 file) is benign or malicious. If the script has been deleted from that folder, I suggest escalating the ticket to someone with forensics and IR knowledge: the folder that stores the scripts is not cleaned out after reboots or updates, so a missing script is a key indicator of an adversary “cleaning up” after themselves.
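For the on-disk check, a small triage helper can list every script the Run Command plugin has dropped, along with its last-modified time, so a responder can compare the files against the alert timeline. This is a Python sketch that assumes the Windows path given earlier; because the plugin version folder (1.1.11 here) changes over time, it scans every version folder instead of hard-coding one.

```python
from datetime import datetime, timezone
from pathlib import Path

# Run Command plugin folder on Windows VMs. The version subfolder changes as
# the plugin is updated, so every version folder is scanned.
PLUGIN_ROOT = Path(r"C:\Packages\Plugins\Microsoft.CPlat.Core.RunCommandWindows")


def list_run_command_scripts(root=PLUGIN_ROOT):
    """Return (path, last-modified time in UTC) for each .ps1 file found in
    <root>/<version>/Downloads, sorted by path."""
    results = []
    for candidate in sorted(Path(root).glob("*/Downloads/*")):
        # Case-insensitive suffix check so .PS1 and .ps1 both match
        if candidate.is_file() and candidate.suffix.lower() == ".ps1":
            modified = datetime.fromtimestamp(candidate.stat().st_mtime, tz=timezone.utc)
            results.append((candidate, modified))
    return results


def print_scripts(root=PLUGIN_ROOT):
    """Print each script's timestamp, path, and contents for quick review."""
    for path, modified in list_run_command_scripts(root):
        print(f"--- {modified.isoformat()} {path}")
        print(path.read_text(errors="replace"))
```

An empty result on a VM whose Activity Log shows Run Command events is exactly the “cleaned up” case described above.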

Custom Script Extension

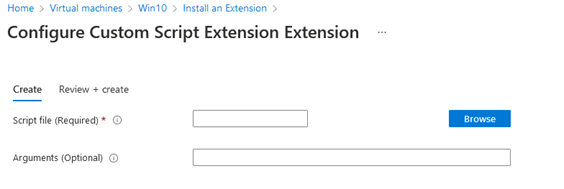

The second technique for command execution is the Custom Script Extension (CSE). This is also a relatively well-known technique, but I would say it’s seldom used and leaves less of a footprint. In the portal, CSE works by uploading a script to the extension, which then deploys it to the VM.

The script that is deployed is then kept on disk at C:\Packages\Plugins\Microsoft.Compute.CustomScriptExtension\1.10.12\Downloads\.

The problem with using the portal for CSE execution is that the script must come from an Azure Storage Account, which means the account in control also needs access to that resource. In addition, another log is generated once something is uploaded to the storage account, so using CSE from the portal is very OPSEC-unfriendly.

OPSEC improves slightly when using the command line, such as Set-AzVMCustomScriptExtension, which allows a script to be pulled from an arbitrary URI such as GitHub. CSE really shines when used via the REST API, which allows an arbitrary command to be sent in a PATCH request. CSE works one way, meaning it does not return data once the script/command is sent. Instead, the status of the extension can be queried, and it will show the output of the script/command. This is accomplished in PowerZure with the new Invoke-AzureVMCustomScriptExtension function.
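As a rough illustration of that REST flow (not PowerZure’s implementation), the Python sketch below builds a PATCH body for a Windows CustomScriptExtension and pulls the command output back out of the extension’s instance view. It only shapes and parses JSON; sending the PATCH to .../virtualMachines/{vm}/extensions/{name} and later GETting the extension with $expand=instanceView is left to whatever HTTP client you use. That CSE reports output in a substatus whose code contains StdOut (for example ComponentStatus/StdOut/succeeded) is an assumption based on observed responses.

```python
import json


def build_cse_patch_body(command):
    """Return a JSON PATCH body that sets a Windows CustomScriptExtension's
    commandToExecute. A PUT would additionally need a "location" field."""
    body = {
        "properties": {
            "publisher": "Microsoft.Compute",
            "type": "CustomScriptExtension",
            "typeHandlerVersion": "1.10",
            "autoUpgradeMinorVersion": True,
            # The command CSE will run on the VM
            "settings": {"commandToExecute": command},
        }
    }
    return json.dumps(body)


def extract_stdout(instance_view):
    """Return the stdout text reported in an extension instance view, or None.

    Assumption: output appears in a substatus whose code contains "StdOut",
    e.g. "ComponentStatus/StdOut/succeeded"."""
    for substatus in instance_view.get("substatuses", []):
        if "StdOut" in substatus.get("code", ""):
            return substatus.get("message", "")
    return None
```

On the parsed GET response, the output would then be `extract_stdout(response["properties"]["instanceView"])`.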

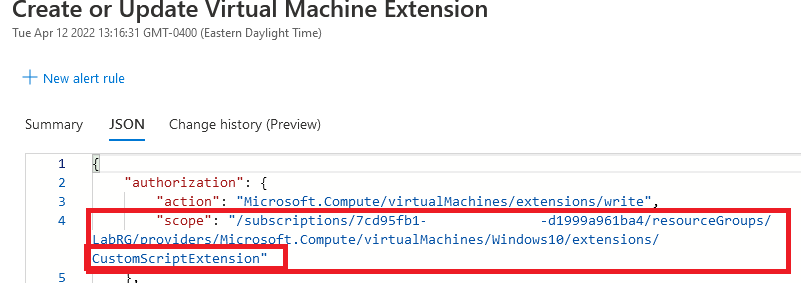

CSE will always generate a log named “Create or Update Virtual Machine Extension”. Once again, the command/script contents are not included in the log, so retrieving them requires access to the host. It is rare to see CSE used legitimately, especially after a VM has been provisioned, but VM extensions in general are commonly used. As a defender, it is therefore imperative not to alert on “Create or Update Virtual Machine Extension” alone; the log’s JSON data specifies that the CSE extension was used, and that should be a key piece of correlation data when configuring the alert.
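One way to build that correlation is to keep only extension-write events and then look for the CSE type name in each one. In the Python sketch below, Microsoft.Compute/virtualMachines/extensions/write is the operation behind the “Create or Update Virtual Machine Extension” log. Searching the whole serialized record for CustomScriptExtension is a deliberately crude, schema-agnostic assumption, because the field that carries the extension type can differ between export formats.

```python
import json

EXT_WRITE_OP = "microsoft.compute/virtualmachines/extensions/write"


def _operation_value(record):
    """Lower-cased operation name; accepts an object with 'value' or a string."""
    op = record.get("operationName") or ""
    if isinstance(op, dict):
        op = op.get("value") or ""
    return op.lower()


def cse_extension_events(records, marker="CustomScriptExtension"):
    """Return extension-write records whose serialized JSON mentions `marker`.

    The whole record is searched because the field holding the extension
    type may vary between exports (assumption)."""
    hits = []
    for rec in records:
        if _operation_value(rec) != EXT_WRITE_OP:
            continue
        if marker.lower() in json.dumps(rec).lower():
            hits.append(rec)
    return hits
```

The marker argument can be swapped to look for other extension types in the same way.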

Desired State Configuration

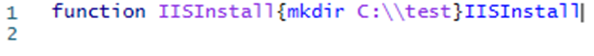

Desired State Configuration (DSC) is similar to Ansible, but it is a tool within PowerShell that allows a host to be set up through code. DSC has its own extension in Azure, which allows configuration files to be uploaded. DSC configuration files are quite picky when it comes to syntax, but the DSC extension is very gullible and will blindly execute anything as long as it follows a certain format, as shown in figure 5.

This can be done via the Az PowerShell cmdlet Publish-AzVMDscConfiguration. The DSC extension requires a .PS1 containing a function, packaged in a .zip file. Since this isn’t actually correct DSC syntax, the extension status will read “failure”, but the code will still be executed. The drawback is that no command output is returned, as the status is overwritten with the failure message.

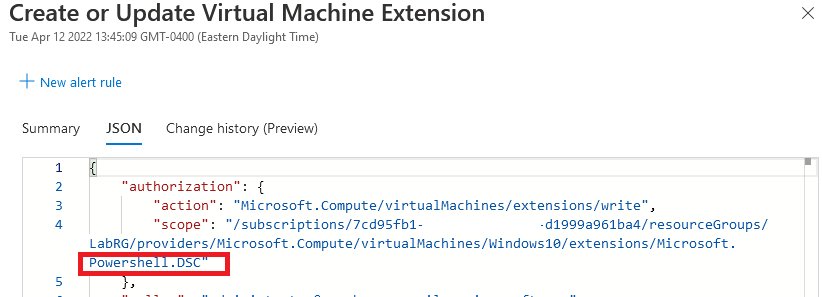

From a defensive perspective, this generates the same log as CSE since it’s also an extension, so the log to look for is “Create or Update Virtual Machine Extension” referencing the DSC extension, as shown in figure 7.

The script is stored on disk at C:\Packages\Plugins\Microsoft.Compute.DesiredStateConfiguration.

VM Application Definitions

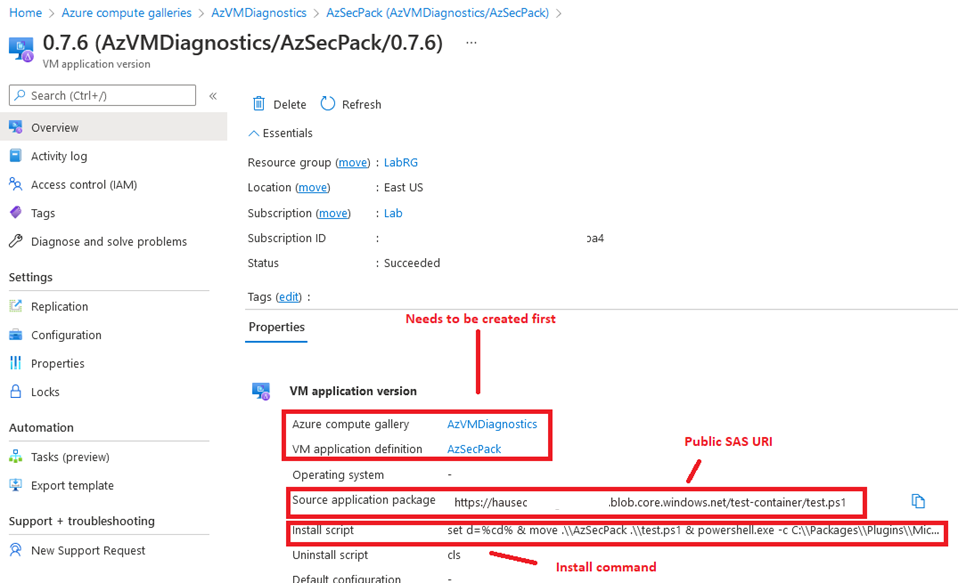

A new feature in Azure, the VM Applications resource is a way to repeatably deploy versioned applications to Azure VMs. For example, if you create a program and deploy it to all Azure VMs as version 1.0, then once you update the program to 1.1, you can use the same VM Application to create another definition and push the update out to any VM. The potential for abuse by adversaries is obvious: being able to push an application to a VM means another avenue for code execution. The drawback of this method is that setting up the application definition takes a few steps, though it can be accomplished using the New-AzGalleryApplication and New-AzGalleryApplicationVersion cmdlets in Az PowerShell.

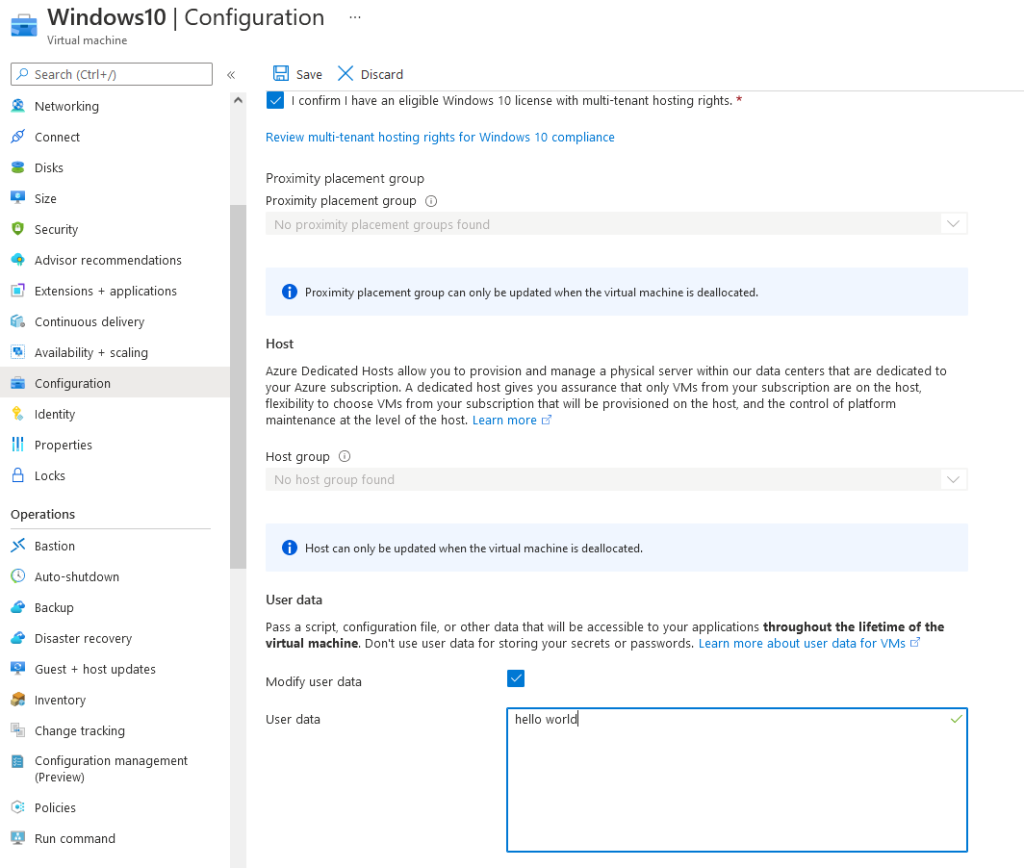

This technique works by using an extension, “VMAppExtension”, which is installed automatically when an application is applied to a VM. The extension downloads the file from the URI and saves it to disk under exactly the application’s name: if the application is named ‘AzApplication’, the file on disk is also called ‘AzApplication’, with no file extension. The “ManageActions” field in the REST API call therefore has to be configured to rename the file with the appropriate extension. If you don’t want to run an entire application, arbitrary PowerShell commands can also be run by abusing the same ManageActions field, either through the same REST API method or through the Azure Portal. Once set up, the definition will look similar to figure 8.
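To make the renaming step concrete, here is a Python sketch of a gallery application version body whose install action first renames the downloaded file (saved under exactly the application name) and then runs it. The cmd-style `move ... & ...` install string is modeled on the pattern in Microsoft’s VM Applications examples, but treat the exact body as an assumption: a real request may need additional fields such as target regions, and all values in the usage note are hypothetical.

```python
import json


def build_app_version_body(location, media_link, app_name, file_ext):
    """Return a JSON gallery application version body.

    The install action renames the downloaded file, which is saved under
    exactly `app_name` with no extension, to `app_name + file_ext` and runs
    it (cmd syntax; an assumption modeled on Microsoft's examples)."""
    target = f"{app_name}{file_ext}"
    body = {
        "location": location,
        "properties": {
            "publishingProfile": {
                # URI the VMAppExtension downloads the file from
                "source": {"mediaLink": media_link},
                "manageActions": {
                    "install": f"move .\\{app_name} .\\{target} & {target}",
                    "remove": f"del {target}",
                },
            }
        },
    }
    return json.dumps(body)
```

For example, `build_app_version_body("eastus", "https://example.blob.core.windows.net/apps/AzApplication", "AzApplication", ".exe")` produces an install action of `move .\AzApplication .\AzApplication.exe & AzApplication.exe`.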

This execution technique is unfortunately slow (about 3-4 minutes to execute an app or command); however, because it is relatively new, I would be surprised if any specific detections have been written for it.

Since it’s an extension that ultimately does the execution, a copy of the application is located at C:\Packages\Plugins\Microsoft.CPlat.Core.VMApplicationManagerWindows\1.0.4\Downloads\ and the status of the execution is kept in C:\Packages\Plugins\Microsoft.CPlat.Core.VMApplicationManagerWindows\1.0.4\Status\.

Because this technique uses both the VM Application resource and the VM resource itself, a “Create or Update Virtual Machine Extension” log is generated for each of them.

Hybrid Worker Groups

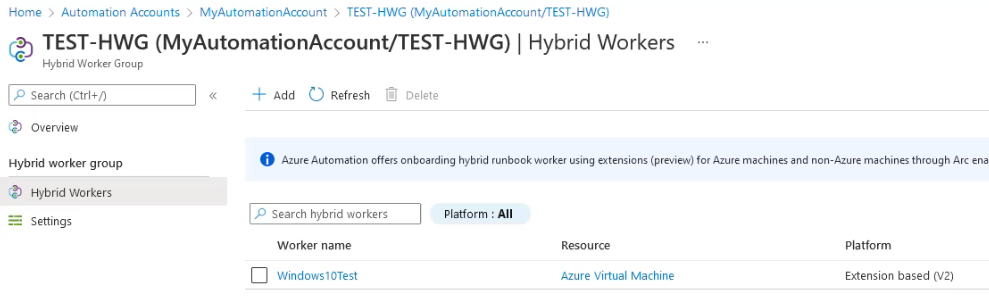

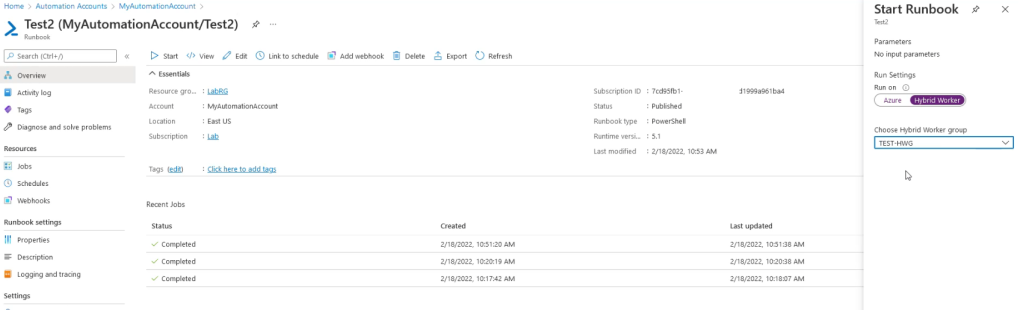

Hybrid Worker Groups (HWGs) allow a Runbook configured in an Automation Account to be run on an Azure virtual machine that is part of the configured HWG. Once again, an extension is used on the VM to deploy the Runbook code onto it. Since an extension is used, credentials are irrelevant and the code is executed as SYSTEM or root.

If using a Windows 10 VM, it’s important to have the Runbook run as PowerShell Version 5.1 instead of 7.1, as 7.1 isn’t installed on the VMs by default and the script will fail to run.

On-disk logs are stored in a different path from the other extensions, at C:\WindowsAzure\Logs\Plugins\Microsoft.Azure.Automation.HybridWorker.HybridWorkerForWindows\0.1.0.18.

When this technique is used, two logs are generated:

- Create an Azure Automation Job

- Create or Update Virtual Machine Extension

Correlating these two events, together with ‘HybridWorkerExtension’ appearing in the ‘scope’ field, suggests that an HWG is being used.
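That correlation can be sketched in Python as pairing each Automation job creation with HybridWorkerExtension writes that occur close to it in time. Assumptions: the records are exported Activity Log JSON, the ‘scope’ field is read from authorization.scope, the operation values Microsoft.Automation/automationAccounts/jobs/write and Microsoft.Compute/virtualMachines/extensions/write stand for the two logs listed above, and the ten-minute window is arbitrary and should be tuned.

```python
import re
from datetime import datetime, timedelta

JOB_WRITE = "microsoft.automation/automationaccounts/jobs/write"
EXT_WRITE = "microsoft.compute/virtualmachines/extensions/write"


def _operation_value(rec):
    """Lower-cased operation name; accepts an object with 'value' or a string."""
    op = rec.get("operationName") or ""
    if isinstance(op, dict):
        op = op.get("value") or ""
    return op.lower()


def _when(rec):
    """Parse eventTimestamp into an aware datetime."""
    ts = rec["eventTimestamp"].replace("Z", "+00:00")
    # Azure may emit 7 fractional digits; fromisoformat() accepts at most 6
    ts = re.sub(r"(\.\d{6})\d+", r"\1", ts)
    return datetime.fromisoformat(ts)


def _scope(rec):
    return ((rec.get("authorization") or {}).get("scope") or "").lower()


def hybrid_worker_pairs(records, window=timedelta(minutes=10)):
    """Return (job_event, extension_event) pairs that fall within `window`."""
    jobs = [r for r in records if _operation_value(r) == JOB_WRITE]
    exts = [
        r for r in records
        if _operation_value(r) == EXT_WRITE and "hybridworkerextension" in _scope(r)
    ]
    pairs = []
    for job in jobs:
        for ext in exts:
            if abs(_when(ext) - _when(job)) <= window:
                pairs.append((job, ext))
    return pairs
```

Requiring both events within the window, rather than alerting on either one alone, keeps routine extension updates from firing the alert.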

This list isn’t comprehensive, and there are still other ways to execute commands on a VM, but I feel these are the most notable ones.